Kafka - Components

1. Introduction to Kafka Components

Kafka is built around a set of key components that work together to provide a powerful distributed messaging and streaming platform. Understanding these components is crucial for designing efficient data processing pipelines and applications.

2. Kafka Brokers

Kafka brokers are the backbone of a Kafka cluster, responsible for storing and managing message data, handling client requests, and coordinating data replication for fault tolerance.

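- Data Storage: Brokers persist each partition they host as an append-only log on disk, so messages survive broker restarts and remain available for the configured retention period.

- Replication: Each partition is copied to several brokers (the topic's replication factor), so if one broker fails, another broker holding a copy can take over.

- Leader and Follower Roles: Each partition has one leader replica that handles all writes and, by default, all reads, while follower replicas on other brokers fetch data from the leader to stay in sync. If the leader fails, an in-sync follower is elected as the new leader.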
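To make the leader and follower roles concrete, here is a toy, single-process model of one partition's replicas. The `Replica` and `Partition` classes and their methods are hypothetical and not part of any Kafka API. The model also assumes every follower is in sync, whereas real brokers maintain an in-sync replica (ISR) set and replicate over the network. The high watermark is computed as the smallest log end offset across the replicas, a simplified version of how Kafka decides which messages are committed.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One broker's copy of a single partition (hypothetical toy class)."""
    broker_id: int
    log: list = field(default_factory=list)

    @property
    def log_end_offset(self) -> int:
        # Offset that the next appended message will receive.
        return len(self.log)


class Partition:
    """A partition with one leader and a fixed set of followers, all assumed in sync."""

    def __init__(self, leader_id: int, follower_ids: list):
        self.leader = Replica(leader_id)
        self.followers = [Replica(b) for b in follower_ids]

    def produce(self, message: str) -> int:
        # Writes always go to the leader, which assigns the next offset.
        self.leader.log.append(message)
        return self.leader.log_end_offset - 1

    def replicate(self, follower: Replica) -> None:
        # A follower fetches whatever it is missing from the end of the leader's log.
        follower.log.extend(self.leader.log[follower.log_end_offset:])

    def high_watermark(self) -> int:
        # Offsets below this value are present on every replica.
        return min(r.log_end_offset for r in [self.leader, *self.followers])


p = Partition(leader_id=1, follower_ids=[2, 3])
p.produce("a")
p.produce("b")
p.replicate(p.followers[0])
print(p.high_watermark())  # 0, because broker 3 has not fetched yet
p.replicate(p.followers[1])
print(p.high_watermark())  # 2
```

In Kafka, consumers can only read messages below the high watermark, which is why a message written to the leader does not become visible to consumers until the in-sync followers have copied it.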

3. Kafka Topics and Partitions

Topics are the core abstraction for organizing and categorizing message streams in Kafka. Each topic is divided into partitions, allowing Kafka to scale and parallelize data processing.

3.1. Topics

Topics are logical categories or feeds to which messages are published. They provide a way to organize data streams within a Kafka cluster.

- Organization: Topics categorize message streams based on their purpose or content.

- Configuration: Topics can be configured with settings such as replication factor and retention period to control data persistence and availability.

3.2. Partitions

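Partitions are subdivisions of a topic that allow Kafka to parallelize message storage and processing. Each partition is an ordered, append-only log in which every message is identified by a sequential offset; ordering is guaranteed within a partition, but not across the partitions of a topic.

- Parallel Processing: Because a topic's partitions can be hosted on different brokers and read by different consumers, they can be written and processed in parallel.

- Load Balancing: Spreading partitions across brokers distributes storage and request load; how evenly depends on partition placement and on how messages are distributed across partitions.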
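The ordering and offset rules for partitions can be illustrated with a small in-memory model. `Topic`, `append`, `read`, and `place_partitions` are illustrative names only, and the round-robin placement is a simplification of how Kafka assigns partitions to brokers.

```python
class Topic:
    """A toy topic: a fixed number of independent, append-only partition logs."""

    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, partition: int, message: str) -> int:
        log = self.partitions[partition]
        log.append(message)
        # Offsets are counted per partition, so each partition starts at 0.
        return len(log) - 1

    def read(self, partition: int, offset: int) -> list:
        # Messages come back in append order, but only within this one partition.
        return self.partitions[partition][offset:]


def place_partitions(num_partitions: int, brokers: list) -> dict:
    """Map each partition to a broker round-robin (hypothetical simplification)."""
    return {p: brokers[p % len(brokers)] for p in range(num_partitions)}


orders = Topic("orders", num_partitions=3)
orders.append(0, "o1")
orders.append(1, "o2")
orders.append(0, "o3")
print(orders.read(0, 0))  # ['o1', 'o3']
print(place_partitions(3, [101, 102]))  # {0: 101, 1: 102, 2: 101}
```

With three partitions on two brokers, broker 101 ends up hosting two of them, a small example of why partition count and placement affect how evenly load is spread.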

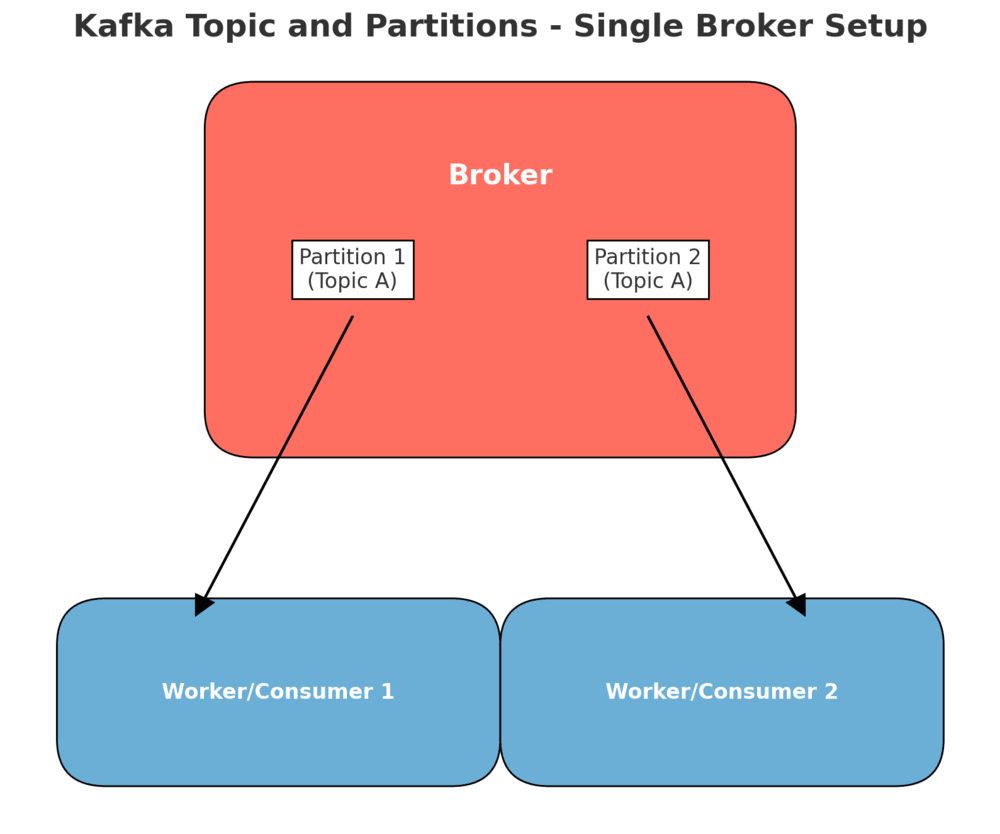

Kafka Topics and Partitions Diagram

The diagram above illustrates the relationship between topics and partitions within a Kafka cluster, highlighting how data is organized and processed.

4. Kafka Producers

Producers are clients that publish messages to Kafka topics. They determine which partition each message is sent to and serialize message keys and values into bytes.

- Message Production: Producers send messages to specific topics and, depending on their acknowledgement (acks) setting, wait for brokers to confirm the messages have been written before treating them as delivered.

- Partitioning Strategy: By default, messages with the same key are sent to the same partition; producers can also plug in custom partitioning logic to control data distribution and load balancing.

- Data Compression: Producers can compress batches of messages (for example with gzip, snappy, lz4, or zstd) to reduce network bandwidth and storage requirements.
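Key-based partitioning can be sketched as hashing the key and taking the result modulo the number of partitions. This is a toy, not Kafka's `Partitioner` interface: it uses `zlib.crc32` as a stand-in for the murmur2 hash that Kafka's default partitioner applies to keys, and it spreads keyless messages round-robin, whereas recent Kafka clients use a sticky strategy that fills a batch for one partition before moving on.

```python
import itertools
import zlib
from typing import Optional


class KeyHashPartitioner:
    """Toy partitioner; not Kafka's Partitioner interface."""

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        # Cycles 0, 1, ..., n-1 for records that have no key.
        self._round_robin = itertools.cycle(range(num_partitions))

    def partition(self, key: Optional[bytes]) -> int:
        if key is None:
            # Keyless records are spread evenly across partitions.
            return next(self._round_robin)
        # Equal keys hash equally, so they always land on the same partition,
        # which preserves per-key ordering. CRC32 stands in for Kafka's murmur2.
        return zlib.crc32(key) % self.num_partitions


partitioner = KeyHashPartitioner(num_partitions=6)
print(partitioner.partition(b"customer-42") == partitioner.partition(b"customer-42"))  # True
print([partitioner.partition(None) for _ in range(4)])  # [0, 1, 2, 3]
```

Because the mapping depends on the partition count, adding partitions to an existing topic changes which partition a given key lands on, which can break per-key ordering relative to data already written.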

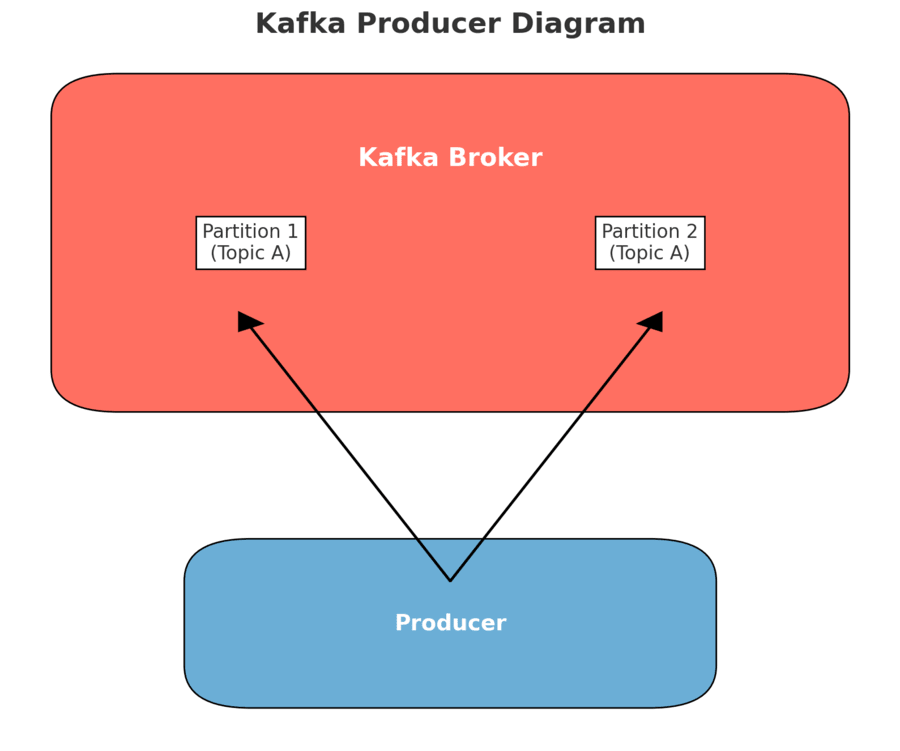

Kafka Producer Diagram

The diagram above illustrates the role of producers in a Kafka cluster, showing how they interact with brokers and topics.

5. Kafka Consumers

Consumers are clients that subscribe to Kafka topics and process incoming messages. They can belong to consumer groups, which enable load balancing and parallel processing.

- Message Consumption: Consumers read messages from topics, processing them for various applications and use cases.

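- Consumer Groups: Consumers can be organized into groups. Each partition of a subscribed topic is assigned to exactly one consumer in the group, so the processing load is shared, and any consumers beyond the number of partitions sit idle.

- Offset Management: Consumers track their position in each partition with an offset and commit it, so that processing can resume where it left off after a restart or rebalance. Whether this gives at-least-once or at-most-once processing depends on whether offsets are committed after or before the messages are processed.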
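Partition assignment and offset commits can be sketched in plain Python. `range_assign` follows the idea behind Kafka's range assignor (sort partitions and members, give each member a contiguous slice, and give the first few members one extra partition when the counts do not divide evenly). `poll_and_process` and its in-memory `committed_offsets` dict are hypothetical stand-ins for a consumer reading a partition and committing offsets; neither function is the Kafka client API.

```python
def range_assign(partitions: list, consumers: list) -> dict:
    """Give each consumer a contiguous slice of one topic's partitions."""
    partitions = sorted(partitions)
    consumers = sorted(consumers)
    per_consumer, extra = divmod(len(partitions), len(consumers))
    assignment = {}
    start = 0
    for i, consumer in enumerate(consumers):
        # The first `extra` consumers each take one additional partition.
        count = per_consumer + (1 if i < extra else 0)
        assignment[consumer] = partitions[start:start + count]
        start += count
    return assignment


def poll_and_process(log: list, committed_offsets: dict, partition: int, process) -> None:
    """Process a partition's messages from its committed offset (hypothetical helper)."""
    for offset in range(committed_offsets.get(partition, 0), len(log)):
        process(log[offset])
        # Commit only after processing succeeds (at-least-once): if `process`
        # raises, the offset is not advanced and the message is retried next time.
        committed_offsets[partition] = offset + 1


print(range_assign([0, 1, 2, 3, 4], ["c1", "c2"]))  # {'c1': [0, 1, 2], 'c2': [3, 4]}
print(range_assign([0, 1], ["a", "b", "c"]))        # {'a': [0], 'b': [1], 'c': []}

commits = {}
poll_and_process(["m0", "m1", "m2"], commits, partition=0, process=print)
print(commits)  # {0: 3}
```

The committed value is the offset of the next message to read, so a restarted consumer picks up at offset 3 here. The second `range_assign` call shows the idle consumer that appears when a group has more members than partitions.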

6. Kafka KRaft (Kafka Raft)

KRaft (Kafka Raft) is a consensus protocol introduced in Kafka to manage cluster metadata without relying on ZooKeeper. A quorum of controller nodes uses the Raft consensus algorithm to replicate a metadata log, and the active controller (the Raft leader) records decisions such as partition leadership changes in that log.

- Metadata Management: KRaft stores cluster metadata within Kafka itself, eliminating the need for an external ZooKeeper cluster.

- Leader Election: The controllers use Raft to elect the active controller, which in turn elects partition leaders, helping to keep data consistent and available across the cluster.

- Simplified Operations: KRaft simplifies Kafka operations by consolidating metadata management into the Kafka ecosystem.
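The majority rule behind Raft, which KRaft's controller quorum relies on, can be expressed in a few lines. The function names are hypothetical, and `committed_records` ignores Raft's term checks: it only computes how many leading metadata-log records are stored on a majority of voters.

```python
def quorum_size(num_voters: int) -> int:
    # A Raft quorum is a strict majority of the voting controllers.
    return num_voters // 2 + 1


def tolerated_failures(num_voters: int) -> int:
    # Voters that can be lost while a majority is still reachable.
    return num_voters - quorum_size(num_voters)


def committed_records(stored_counts: list) -> int:
    """How many leading metadata-log records are stored on a majority of voters.

    stored_counts[i] is the number of records voter i (leader included) has stored.
    Simplified: real Raft also requires the newest such record to be from the
    leader's current term.
    """
    ranked = sorted(stored_counts, reverse=True)
    # At least quorum_size voters hold this many records or more.
    return ranked[quorum_size(len(stored_counts)) - 1]


for n in (3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
print(committed_records([10, 8, 5]))  # 8
```

With these rules, three voters tolerate one failure and five tolerate two, while a fourth voter raises the quorum size without tolerating any additional failures, which is why controller quorums are usually given an odd number of voters.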

Kafka KRaft Diagram

The following diagram illustrates how KRaft operates within a Kafka cluster, managing metadata and facilitating leader election.

7. Notes and Considerations

Understanding Kafka's components and their interactions is essential for designing and managing efficient data processing pipelines. Each component plays a critical role in ensuring Kafka's scalability, fault tolerance, and high throughput capabilities.