Kafka - Consumers

1. Introduction to Kafka Consumers

Kafka consumers are clients that subscribe to Kafka topics and process incoming records. They are essential for consuming data streams, enabling applications to respond to real-time events. This guide explores various consumer techniques, scenarios, and message processing strategies, with examples in C# 12 (.NET 8).

2. Consumer and Partition Interaction

Understanding how Kafka consumers interact with partitions is crucial for designing efficient data processing pipelines. Kafka ensures that each partition is consumed by only one consumer within a single consumer group, which preserves message order within the partition and prevents duplicated work. However, multiple consumer groups can independently consume the same partitions, allowing different applications or services to process the same data stream.

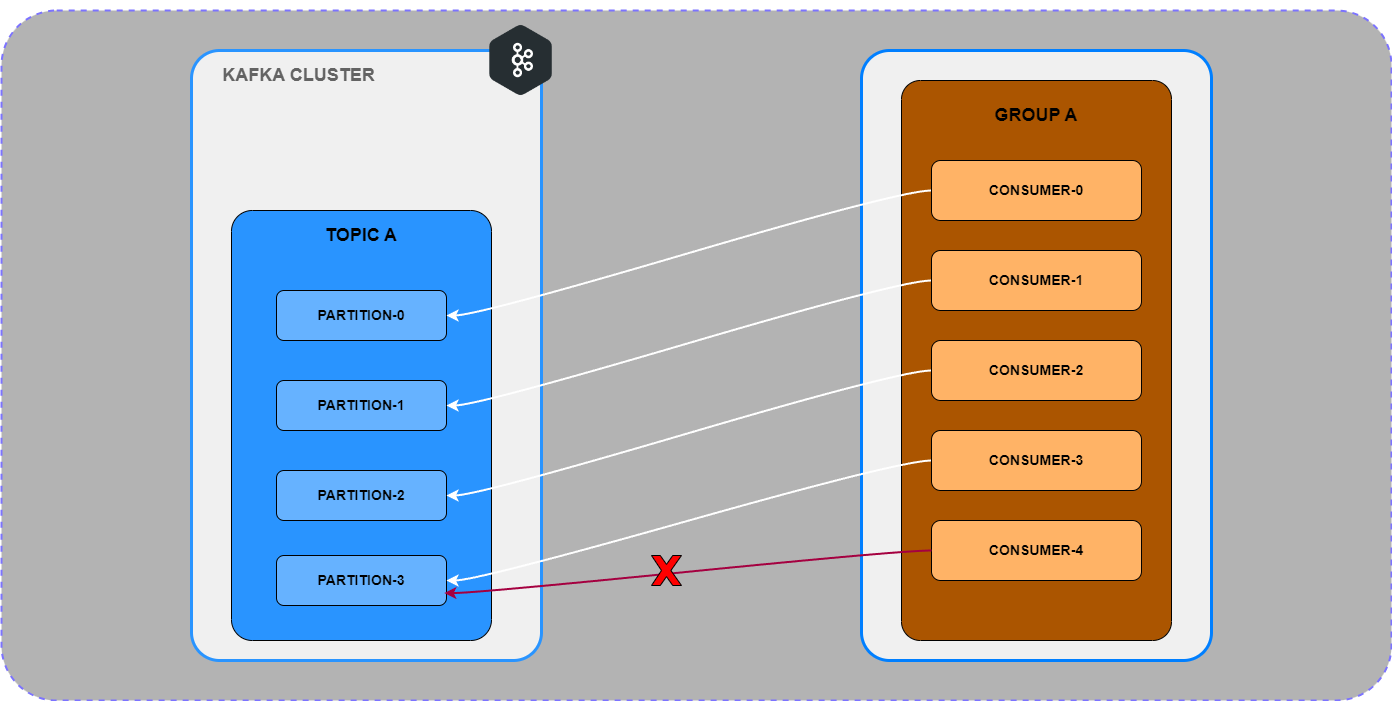

2.1. One Consumer per Partition (in a Consumer Group)

Within a single consumer group, Kafka assigns each partition to exactly one consumer, so no two consumers in the same group read from the same partition at the same time. This keeps the messages of a partition in order and delivers each message to only one consumer in the group; whether it is also processed exactly once depends on how offsets are committed (see Section 5). If a group has more consumers than the topic has partitions, the extra consumers stay idle.
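The rule can be illustrated with a simplified in-memory model of one group. The hypothetical `AssignmentModel.Assign` method below deals partitions out round-robin; that distribution is an assumption for illustration, not the algorithm Kafka's group coordinator or Confluent.Kafka actually runs. What it does capture is the invariant: every partition has exactly one owner, and consumers beyond the partition count receive nothing.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical toy model of partition assignment inside one consumer group.
public static class AssignmentModel
{
    // Maps each partition to exactly one consumer, round-robin.
    // Consumers beyond the partition count keep an empty list (they stay idle).
    // Assumes consumer names are unique.
    public static Dictionary<string, List<int>> Assign(IReadOnlyList<string> consumers, int partitionCount)
    {
        var assignment = consumers.ToDictionary(c => c, _ => new List<int>());
        if (consumers.Count == 0)
            return assignment;

        for (var partition = 0; partition < partitionCount; partition++)
        {
            var owner = consumers[partition % consumers.Count];
            assignment[owner].Add(partition);
        }
        return assignment;
    }
}
```

For example, four consumers and three partitions yield c1 → [0], c2 → [1], c3 → [2], and an empty list for c4.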

Consumer-Partition Interaction Diagram

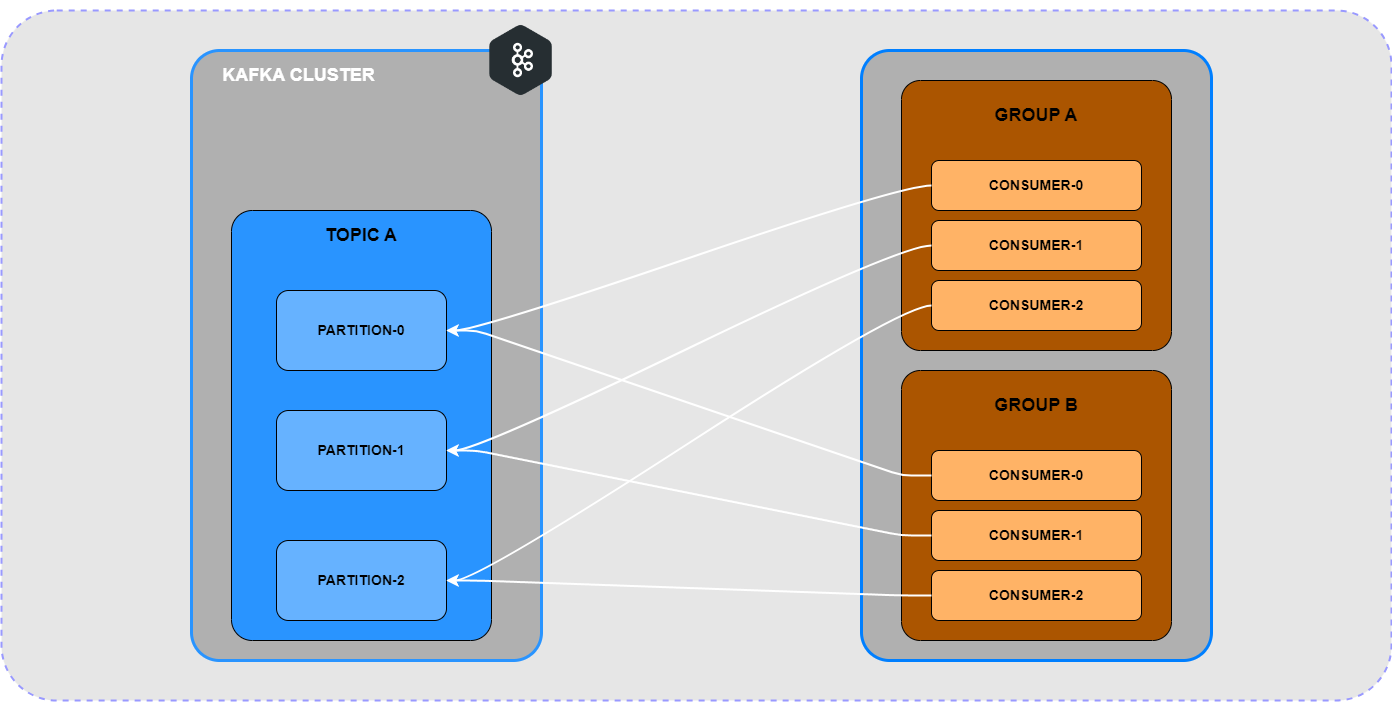

2.2. Multiple Consumers Reading from the Same Partition (Across Different Groups)

While Kafka restricts multiple consumers within the same group from reading the same partition, different consumer groups can independently read from the same partition. This allows multiple applications to process the same stream of data independently, enabling different services to have their own logic and processing workflows.
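Independent consumption works because committed offsets are kept per consumer group (Kafka stores them in its internal `__consumer_offsets` topic). The toy model below, built around a hypothetical `GroupOffsetStore` class, shows only that bookkeeping idea: advancing one group's position leaves another group's position untouched. It is an illustration, not how the broker actually stores offsets.

```csharp
using System.Collections.Generic;

// Hypothetical toy model: committed offsets keyed by (group, topic, partition).
public sealed class GroupOffsetStore
{
    private readonly Dictionary<(string Group, string Topic, int Partition), long> _committed = new();

    // Records the next offset this group should read from the partition.
    public void Commit(string group, string topic, int partition, long nextOffset) =>
        _committed[(group, topic, partition)] = nextOffset;

    // Returns the group's committed position, or null if it never committed for this partition.
    public long? Position(string group, string topic, int partition) =>
        _committed.TryGetValue((group, topic, partition), out var offset) ? offset : null;
}
```

If a billing service's group has committed offset 120 on partition 0 of an `orders` topic and an analytics group has committed 45, each resumes from its own position.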

The following diagram illustrates how Kafka manages consumer and partition interactions, showing how partitions are assigned to consumers within a group and how multiple groups can consume the same partitions:

Group-Consumer-Partition Interaction Diagram

3. Basic Kafka Consumer Workflow

At its core, a Kafka consumer subscribes to one or more topics and reads records from them. Each record comes from a specific partition, and records within a partition are delivered in offset order; Kafka gives no ordering guarantee across partitions.

3.1. Consumer API

The Kafka Consumer API allows you to create a consumer instance, configure it, and consume records. Below is a basic example of initializing a consumer and consuming records in C# 12 (.NET 8):

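```csharp
using Confluent.Kafka;

var config = new ConsumerConfig
{
    GroupId = "test-consumer-group",
    BootstrapServers = "localhost:9092",
    AutoOffsetReset = AutoOffsetReset.Earliest
};

// Stop the loop cleanly on Ctrl+C
using var cts = new CancellationTokenSource();
Console.CancelKeyPress += (_, e) => { e.Cancel = true; cts.Cancel(); };

using var consumer = new ConsumerBuilder<string, string>(config).Build();
consumer.Subscribe("test-topic");

try
{
    while (true)
    {
        try
        {
            var consumeResult = consumer.Consume(cts.Token);
            Console.WriteLine($"Consumed message '{consumeResult.Message.Value}' at: '{consumeResult.TopicPartitionOffset}'.");
        }
        catch (ConsumeException e)
        {
            // A single failed record should not stop the consumer
            Console.WriteLine($"Error occurred: {e.Error.Reason}");
        }
    }
}
catch (OperationCanceledException)
{
    // Cancellation requested: fall through to Close
}
finally
{
    // Leave the group cleanly (and commit final offsets when auto-commit is enabled)
    consumer.Close();
}
```

4. Consumer Groups and Partition Assignment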

Kafka consumers can be organized into consumer groups. Within a group, each record is delivered to only one consumer, which allows for parallel processing and load balancing.

4.1. Consumer Groups

When multiple consumers belong to the same group, Kafka distributes partitions among them, ensuring that each partition is consumed by only one consumer in the group.

4.2. Partition Assignment

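Kafka assigns partitions to the consumers in a group automatically and rebalances them when consumers join or leave. In Confluent.Kafka you can choose the built-in assignment strategy with `PartitionAssignmentStrategy` (`Range`, `RoundRobin`, or `CooperativeSticky`) and observe assignment changes with handlers such as `SetPartitionsAssignedHandler`. For full control, a consumer can skip group assignment and call `Assign` with explicit partitions.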
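The sketch below shows manual assignment, assuming a topic named `test-topic` and a hypothetical `ManualAssignment` helper class. A manually assigned consumer does not take part in group rebalancing, so if one instance stops, no other instance takes over its partitions automatically.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using Confluent.Kafka;

public static class ManualAssignment
{
    // Builds an explicit assignment that starts each listed partition at the given offset.
    public static List<TopicPartitionOffset> ForPartitions(string topic, IEnumerable<int> partitions, Offset start) =>
        partitions.Select(p => new TopicPartitionOffset(topic, new Partition(p), start)).ToList();

    public static void Run(ConsumerConfig config, CancellationToken token)
    {
        using var consumer = new ConsumerBuilder<string, string>(config).Build();
        // Assign instead of Subscribe: these partitions are not rebalanced by the group
        consumer.Assign(ForPartitions("test-topic", new[] { 0, 1 }, Offset.Beginning));
        try
        {
            while (!token.IsCancellationRequested)
            {
                var result = consumer.Consume(token);
                Console.WriteLine($"{result.TopicPartitionOffset}: {result.Message.Value}");
            }
        }
        catch (OperationCanceledException)
        {
            // Shutdown requested
        }
        finally
        {
            consumer.Close();
        }
    }
}
```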

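```csharp
var config = new ConsumerConfig
{
    GroupId = "custom-group",
    BootstrapServers = "localhost:9092",
    // Pick one of the built-in strategies: Range, RoundRobin or CooperativeSticky
    PartitionAssignmentStrategy = PartitionAssignmentStrategy.RoundRobin
};

using var consumer = new ConsumerBuilder<string, string>(config)
    // Invoked after the group assigns partitions to this consumer
    .SetPartitionsAssignedHandler((c, partitions) =>
    {
        Console.WriteLine($"Partitions assigned: {string.Join(", ", partitions)}");
    })
    .Build();
```

5. Offset Management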

Offsets mark a consumer's position in a partition: the committed offset is the offset of the next record the group will read. Offsets can be committed automatically or manually, and the approach you choose determines whether a failure can cause records to be skipped or processed more than once.

5.1. Automatic Offset Management

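With `EnableAutoCommit = true` (the default), the client commits offsets in the background every `AutoCommitIntervalMs` (5 seconds by default). This keeps consumer logic simple, but by default Confluent.Kafka stores a record's offset as soon as `Consume` returns it. A crash after a commit but before processing finishes can therefore skip that record, while a crash before the next commit causes records to be processed again.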
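A common middle ground in Confluent.Kafka keeps the background auto-commit but disables automatic offset storing (`EnableAutoOffsetStore = false`) and calls `StoreOffset` only after a record has been processed, so each periodic commit covers only finished work (at-least-once delivery). This is a sketch: the `AtLeastOnceConsumer` name, the topic, and the `process` callback are assumptions.

```csharp
using System;
using System.Threading;
using Confluent.Kafka;

public static class AtLeastOnceConsumer
{
    public static ConsumerConfig CreateConfig() => new()
    {
        GroupId = "auto-offset-group",
        BootstrapServers = "localhost:9092",
        EnableAutoCommit = true,       // commit stored offsets in the background...
        AutoCommitIntervalMs = 5000,   // ...every 5 seconds
        EnableAutoOffsetStore = false  // ...but only offsets we explicitly store
    };

    public static void Run(string topic, Action<ConsumeResult<string, string>> process, CancellationToken token)
    {
        using var consumer = new ConsumerBuilder<string, string>(CreateConfig()).Build();
        consumer.Subscribe(topic);
        try
        {
            while (true)
            {
                var result = consumer.Consume(token);
                process(result);              // if this throws, the offset is never stored
                consumer.StoreOffset(result); // mark as done; included in the next auto-commit
            }
        }
        catch (OperationCanceledException)
        {
            // Shutdown requested
        }
        finally
        {
            consumer.Close(); // with auto-commit enabled, Close commits the stored offsets
        }
    }
}
```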

```csharp
var config = new ConsumerConfig
{
    GroupId = "auto-offset-group",
    BootstrapServers = "localhost:9092",
    EnableAutoCommit = true,     // the default
    AutoCommitIntervalMs = 5000  // commit every 5 seconds (the default)
};
```

5.2. Manual Offset Management

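Manual offset management gives you control over when offsets are committed. Committing only after a record has been processed yields at-least-once delivery: a crash can cause reprocessing but not loss. The cost is more consumer logic and, if every record is committed synchronously, lower throughput.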
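To reduce commit round trips, records can be committed in batches. Because one batch can span several partitions, you need the next offset to read for each partition, not just for the last record. The hypothetical `OffsetTracker` below keeps that bookkeeping with plain .NET types and assumes records within a partition are processed in order; each drained entry maps to `new TopicPartitionOffset(topic, new Partition(partition), new Offset(offset))` for `consumer.Commit(IEnumerable<TopicPartitionOffset>)`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: tracks what to commit after processing records from several partitions.
public sealed class OffsetTracker
{
    private readonly Dictionary<(string Topic, int Partition), long> _next = new();

    public int Pending => _next.Count;

    // Call after the record at `offset` has been fully processed.
    public void MarkProcessed(string topic, int partition, long offset)
    {
        var key = (topic, partition);
        // Kafka commits the next offset to read, hence offset + 1.
        var candidate = offset + 1;
        if (!_next.TryGetValue(key, out var current) || candidate > current)
            _next[key] = candidate;
    }

    // Returns the positions to commit, sorted by topic and partition, and resets for the next batch.
    public List<(string Topic, int Partition, long Offset)> Drain()
    {
        var batch = _next
            .Select(kv => (Topic: kv.Key.Topic, Partition: kv.Key.Partition, Offset: kv.Value))
            .OrderBy(x => x.Topic, StringComparer.Ordinal)
            .ThenBy(x => x.Partition)
            .ToList();
        _next.Clear();
        return batch;
    }
}
```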

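```csharp
// Assumes the consumer was built with EnableAutoCommit = false
while (true)
{
    var consumeResult = consumer.Consume();
    // Process message first...
    // ...then commit; this commits consumeResult.Offset + 1, the next record to read
    consumer.Commit(consumeResult);
}
```

6. Error Handling and Retries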

Handling errors and retries is critical in Kafka consumer applications to ensure that message processing is reliable and resilient to failures.

6.1. Handling Deserialization Errors

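Errors can occur during deserialization of messages. In Confluent.Kafka, a record that the configured deserializer cannot decode makes `Consume` throw a `ConsumeException` whose `Error.Code` is `Local_KeyDeserialization` or `Local_ValueDeserialization`; the exception's `ConsumerRecord` exposes the raw bytes, so the record can be logged or skipped. The handler registered with `SetErrorHandler` reports client-level errors (such as broker connectivity problems) rather than per-record deserialization failures.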
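One way to skip undecodable records without stopping the consumer is to catch that exception, log it, and keep polling. A minimal sketch follows; the `DeserializationErrors` class and the log format are illustrative assumptions.

```csharp
using System;
using Confluent.Kafka;

public static class DeserializationErrors
{
    // True when the exception came from the key or value deserializer rather than the broker.
    public static bool IsDeserializationError(ConsumeException e) =>
        e.Error.Code is ErrorCode.Local_KeyDeserialization or ErrorCode.Local_ValueDeserialization;

    // Returns null if nothing arrived within the timeout or if the record could not be deserialized.
    public static ConsumeResult<string, string>? TryConsume(IConsumer<string, string> consumer, TimeSpan timeout)
    {
        try
        {
            return consumer.Consume(timeout);
        }
        catch (ConsumeException e) when (IsDeserializationError(e))
        {
            // e.ConsumerRecord carries the raw key/value bytes of the faulty record.
            Console.WriteLine($"Skipping undeserializable record at {e.ConsumerRecord?.TopicPartitionOffset}: {e.Error.Reason}");
            return null;
        }
    }
}
```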

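```csharp
var consumer = new ConsumerBuilder<string, string>(config)
    // Reports client-level errors (e.g. broker connectivity), not per-record deserialization failures
    .SetErrorHandler((_, e) => Console.WriteLine($"Error: {e.Reason}"))
    .Build();
```

6.2. Implementing Retry Logic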

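Retry logic can handle transient errors during message processing so that messages are not lost to temporary issues. Keep the total time spent retrying well below `MaxPollIntervalMs`: if `Consume` is not called within that interval, the consumer is considered failed and its partitions are reassigned.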

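```csharp
const int maxAttempts = 3;

while (true)
{
    // Consume once, then retry only the processing of this record
    var consumeResult = consumer.Consume();
    for (var attempt = 1; attempt <= maxAttempts; attempt++)
    {
        try
        {
            // Process message
            break; // success: stop retrying this record
        }
        catch (Exception ex) when (attempt < maxAttempts)
        {
            Console.WriteLine($"Processing failed: {ex.Message}. Retrying (attempt {attempt + 1} of {maxAttempts})...");
            Thread.Sleep(TimeSpan.FromMilliseconds(200 * attempt)); // simple linear backoff
        }
    }
    // On the final failure the exception propagates; alternatives include logging
    // the record or publishing it to a dead-letter topic and moving on.
}
```

7. Message Filtering and Transformation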

Kafka consumers often need to filter or transform messages before processing them, ensuring that only relevant data is handled by the application.

7.1. Filtering Messages

Messages can be filtered based on criteria such as key, value, or headers, allowing the consumer to skip unnecessary records.
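Filters can also inspect headers, which avoids deserializing values you will discard anyway. The sketch assumes a hypothetical `event-type` header holding a UTF-8 string; the header name and the `MessageFilter` class are illustrations. Filtering happens after records are fetched, so skipped records still cost network traffic, and offset commits should still move past them.

```csharp
using System.Text;
using Confluent.Kafka;

public static class MessageFilter
{
    // Keeps a record only if its last "event-type" header equals the wanted type.
    public static bool HasEventType(Message<string, string> message, string wantedType)
    {
        if (message.Headers is null ||
            !message.Headers.TryGetLastBytes("event-type", out var raw))
        {
            return false; // no such header: filter the record out
        }
        return Encoding.UTF8.GetString(raw) == wantedType;
    }
}
```

In the poll loop this could read `if (!MessageFilter.HasEventType(consumeResult.Message, "order-created")) continue;`.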

```csharp
// Only handle records with the key we care about
if (consumeResult.Message.Key == "important-key")
{
    // Process message
}
```

7.2. Transforming Messages

Message transformation involves modifying the message content before processing, such as converting formats or enriching data.
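.NET 8 also ships System.Text.Json, which needs no extra package. The sketch below deserializes a value into a record and derives an enriched form; the `OrderCreated` and `OrderSummary` shapes, their field names, and returning `null` for malformed payloads are assumptions for illustration.

```csharp
using System.Text.Json;

// Hypothetical payload shape, e.g. {"orderId":"A1","amountCents":1250}
public record OrderCreated(string OrderId, long AmountCents);

// Hypothetical transformed shape used by downstream processing.
public record OrderSummary(string OrderId, decimal Amount);

public static class OrderTransform
{
    // Web defaults: camelCase names and case-insensitive property matching.
    private static readonly JsonSerializerOptions Options = new(JsonSerializerDefaults.Web);

    // Returns null when the payload is JSON null or not valid JSON.
    public static OrderSummary? Transform(string json)
    {
        try
        {
            var order = JsonSerializer.Deserialize<OrderCreated>(json, Options);
            return order is null ? null : new OrderSummary(order.OrderId, order.AmountCents / 100m);
        }
        catch (JsonException)
        {
            return null;
        }
    }
}
```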

```csharp
using Newtonsoft.Json;

// MyType stands for your own message class
var transformedValue = JsonConvert.DeserializeObject<MyType>(consumeResult.Message.Value);
// Process transformed message
```

8. Multithreading and Parallel Processing

Kafka consumers can leverage multithreading and parallel processing to handle high-throughput data streams efficiently.

8.1. Using Multiple Consumers

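Multiple consumer instances in the same consumer group can run in parallel, each handling a different subset of partitions, to increase throughput. The achievable parallelism is capped by the number of partitions, and each consumer instance is normally driven from a single thread.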
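A sketch of that pattern, assuming every instance shares the same `GroupId` (so the group coordinator splits partitions between them) and each consumer is driven by its own dedicated task. `ParallelConsumers.Start` and its `handle` callback are hypothetical names.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Confluent.Kafka;

public static class ParallelConsumers
{
    // Starts `count` consumers in the same group, each on its own long-running task.
    public static Task[] Start(ConsumerConfig config, string topic, int count,
        Action<ConsumeResult<string, string>> handle, CancellationToken token) =>
        Enumerable.Range(0, count)
            .Select(_ => Task.Factory.StartNew(() =>
            {
                using var consumer = new ConsumerBuilder<string, string>(config).Build();
                consumer.Subscribe(topic);
                try
                {
                    while (true)
                        handle(consumer.Consume(token));
                }
                catch (OperationCanceledException)
                {
                    // Cancellation requested: fall through to Close
                }
                finally
                {
                    consumer.Close(); // leave the group so its partitions are reassigned promptly
                }
            }, token, TaskCreationOptions.LongRunning, TaskScheduler.Default))
            .ToArray();
}
```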

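```csharp
// Same config, and therefore the same GroupId: the group splits the partitions between them
using var consumer1 = new ConsumerBuilder<string, string>(config).Build();
using var consumer2 = new ConsumerBuilder<string, string>(config).Build();
// Run each consumer on a different thread
```

8.2. Using Thread Pools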

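A thread pool can parallelize message processing within a single consumer. The trade-offs are that records from the same partition may complete out of order, and offsets must only be committed for records whose processing has actually finished.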
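One way to keep parallelism without losing per-partition order is to route records by partition to a fixed set of workers, each draining its own queue sequentially. The sketch uses `System.Threading.Channels`; the `PartitionedDispatcher` type, the worker count, and routing by `partition % workerCount` are assumptions. Committing offsets only for finished records is left out here.

```csharp
using System;
using System.Linq;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical dispatcher: parallel across partitions, sequential within each partition.
public sealed class PartitionedDispatcher<T>
{
    private readonly Channel<(int Partition, T Item)>[] _queues;
    private readonly Task[] _workers;

    public PartitionedDispatcher(int workerCount, Func<int, T, Task> process)
    {
        ArgumentOutOfRangeException.ThrowIfNegativeOrZero(workerCount);
        _queues = Enumerable.Range(0, workerCount)
            .Select(_ => Channel.CreateUnbounded<(int Partition, T Item)>(
                new UnboundedChannelOptions { SingleReader = true }))
            .ToArray();
        // One reader task per queue processes its items strictly in arrival order.
        _workers = _queues
            .Select(queue => Task.Run(async () =>
            {
                await foreach (var entry in queue.Reader.ReadAllAsync())
                    await process(entry.Partition, entry.Item);
            }))
            .ToArray();
    }

    // The same partition always maps to the same worker, which preserves its order.
    public ValueTask DispatchAsync(int partition, T item) =>
        _queues[partition % _queues.Length].Writer.WriteAsync((partition, item));

    // Stops accepting work and waits until every queued item has been processed.
    public Task CompleteAsync()
    {
        foreach (var queue in _queues)
            queue.Writer.Complete();
        return Task.WhenAll(_workers);
    }
}
```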

```csharp
while (true)
{
    var consumeResult = consumer.Consume();
    // Queue work on the .NET thread pool; records may now finish out of order
    ThreadPool.QueueUserWorkItem(_ => ProcessMessage(consumeResult));
}
```

9. Consuming from Multiple Topics

Kafka consumers can subscribe to multiple topics simultaneously, allowing them to process data from different streams in a unified manner.

9.1. Subscribing to Multiple Topics

Consumers can be configured to subscribe to multiple topics by providing a list of topic names.

```csharp
consumer.Subscribe(new[] { "topic1", "topic2", "topic3" });
```

9.2. Processing Messages from Different Topics

When consuming from multiple topics, the consumer can handle messages differently based on the topic they originate from.
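As the number of topics grows, an if/else chain becomes harder to maintain. One alternative, sketched with a hypothetical `TopicRouter` class, is a dictionary from topic name to handler with a fallback for unknown topics. It works on plain strings, so it can wrap any `consumeResult.Topic` and `consumeResult.Message.Value` pair.

```csharp
using System;
using System.Collections.Generic;

public sealed class TopicRouter
{
    private readonly Dictionary<string, Action<string>> _handlers = new(StringComparer.Ordinal);
    private readonly Action<string, string> _fallback;

    public TopicRouter(Action<string, string> fallback) => _fallback = fallback;

    // Registers the handler for one topic; returns this router for chaining.
    public TopicRouter On(string topic, Action<string> handler)
    {
        _handlers[topic] = handler;
        return this;
    }

    // Calls the handler registered for the topic, or the fallback with (topic, value).
    public void Route(string topic, string value)
    {
        if (_handlers.TryGetValue(topic, out var handler))
            handler(value);
        else
            _fallback(topic, value);
    }
}
```

Inside the poll loop this becomes `router.Route(consumeResult.Topic, consumeResult.Message.Value);`.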

```csharp
while (true)
{
    var consumeResult = consumer.Consume();
    if (consumeResult.Topic == "topic1")
    {
        // Process message for topic1
    }
    else if (consumeResult.Topic == "topic2")
    {
        // Process message for topic2
    }
    else
    {
        // Process message for other topics
    }
}
```

10. Advanced Consumer Configurations

Kafka consumers offer various advanced configurations that allow fine-tuning for specific use cases, improving performance, reliability, and resource utilization.

10.1. Consumer Lag Monitoring

Consumer lag is the difference between a partition's high watermark (the offset the next produced record will receive) and the consumer's current position. It is a critical metric for checking that consumers keep up with the data stream.
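Lag is usually watched per partition and in total. The hypothetical `LagCalculator` below takes, for each assigned partition, the high watermark (for instance from `consumer.QueryWatermarkOffsets`) and the consumer's position (for instance from `consumer.Position`, the next offset to read) as plain numbers. Treating a negative position such as `Offset.Unset` as "lag unknown" is a simplifying assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LagCalculator
{
    // Lag for one partition: records written but not yet read.
    // A negative position (e.g. Offset.Unset before the first fetch) means the lag is unknown.
    public static long? PartitionLag(long highWatermark, long position) =>
        position < 0 ? null : Math.Max(0, highWatermark - position);

    // Sums the known per-partition lags, counting unknown ones as zero.
    public static long TotalLag(IEnumerable<(long HighWatermark, long Position)> partitions) =>
        partitions.Select(p => PartitionLag(p.HighWatermark, p.Position) ?? 0).Sum();
}
```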

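```csharp
// The high watermark is the offset the next produced record will receive; our position is Offset + 1
var watermarks = consumer.QueryWatermarkOffsets(consumeResult.TopicPartition, TimeSpan.FromSeconds(5));
var lag = watermarks.High.Value - (consumeResult.Offset.Value + 1);
Console.WriteLine($"Consumer lag: {lag}");
```

10.2. Configuring Polling Interval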

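Confluent.Kafka has no `PollIntervalMs` setting. The client fetches records in the background, and the application polls whenever it calls `Consume`; passing a timeout bounds how long a call blocks when no records are available. Two related settings are `MaxPollIntervalMs`, the maximum time allowed between `Consume` calls before the consumer is considered failed (5 minutes by default), and `FetchWaitMaxMs`, the maximum time the broker waits to fill a fetch request.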

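```csharp
var config = new ConsumerConfig { MaxPollIntervalMs = 300000, FetchWaitMaxMs = 100 };
var consumeResult = consumer.Consume(TimeSpan.FromMilliseconds(100)); // null if nothing arrives within 100 ms
```

11. Monitoring and Metrics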

Monitoring Kafka consumers is essential for ensuring they are operating efficiently and reliably. Kafka provides built-in metrics that can be used to monitor consumer performance.

11.1. Key Metrics

Important metrics to monitor include:

- Consumer Lag: Tracks how far behind the consumer is from the latest data.

- Rebalance Events: Measures the frequency and duration of partition rebalances.

- Memory Usage: Monitors the memory consumption of the consumer application.

- Processing Time: Tracks the time taken to process each message.
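With Confluent.Kafka, several of these numbers are available from librdkafka's statistics: set `StatisticsIntervalMs` and register `SetStatisticsHandler` to receive a JSON document at that interval. The sketch below sums the per-partition `consumer_lag` fields. The JSON layout it assumes (`topics` → `partitions` → `consumer_lag`, with `-1` meaning unknown) follows librdkafka's statistics documentation and should be checked against the version you run; `StatsParser` is a hypothetical name.

```csharp
using System.Text.Json;

public static class StatsParser
{
    // Sums consumer_lag over all partitions in a librdkafka statistics document,
    // ignoring entries that report -1 (lag unknown, e.g. the internal "-1" partition).
    public static long TotalConsumerLag(string statisticsJson)
    {
        using var doc = JsonDocument.Parse(statisticsJson);
        long total = 0;
        if (!doc.RootElement.TryGetProperty("topics", out var topics))
            return total;

        foreach (var topic in topics.EnumerateObject())
        {
            if (!topic.Value.TryGetProperty("partitions", out var partitions))
                continue;
            foreach (var partition in partitions.EnumerateObject())
            {
                if (partition.Value.TryGetProperty("consumer_lag", out var lag) && lag.GetInt64() >= 0)
                    total += lag.GetInt64();
            }
        }
        return total;
    }
}
```

Wiring it up could look like `new ConsumerBuilder<string, string>(config).SetStatisticsHandler((_, json) => Console.WriteLine($"Total lag: {StatsParser.TotalConsumerLag(json)}"))`, with `StatisticsIntervalMs = 5000` in the config.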

11.2. Monitoring Tools

Several tools can be used to monitor Kafka consumer metrics, including:

- Prometheus and Grafana: Commonly used for visualizing Kafka metrics.

- Confluent Control Center: A management and monitoring tool specifically for Kafka.

- Datadog: A cloud-based monitoring platform that integrates with Kafka.

12. Best Practices and Optimizations

Following best practices when configuring and using Kafka consumers can significantly improve performance, reliability, and maintainability.

- Optimize Fetching: Tune fetch settings such as `FetchWaitMaxMs` and `FetchMinBytes` to balance throughput and latency, and keep per-record processing well within `MaxPollIntervalMs`.

- Monitor Consumer Lag: Regularly monitor consumer lag to ensure that your consumers are keeping up with the data stream.

- Handle Errors Gracefully: Implement robust error handling and retry logic to manage transient failures and prevent data loss.

- Use Consumer Groups Wisely: Group consumers strategically to optimize load balancing and parallel processing.

- Monitor Metrics: Regularly monitor consumer metrics to detect performance bottlenecks and failures early.

13. Summary

Kafka consumers are a critical component in processing data streams efficiently. By understanding and utilizing the various techniques, scenarios, and configurations available, you can optimize message consumption, ensure data integrity, and achieve high performance in your Kafka-based applications.